In The Beginning...

The history of computers starts about 2,000 years ago with the birth of the abacus, a wooden rack holding horizontal wires with beads strung on them. When these beads are moved around according to programming rules memorized by the user, all regular arithmetic problems can be done. Another important invention from around the same time was the astrolabe, used for navigation.

Blaise Pascal is usually credited with building the first digital computer in 1642. It added numbers entered with dials and was made to help his father, a tax collector. In 1671, Gottfried Wilhelm von Leibniz invented a computer that was built in 1694. It could add and, after some adjustment, multiply. Leibniz invented a special stepped-gear mechanism for entering the addend digits, a mechanism that later mechanical calculators continued to use.

The prototypes made by Pascal and Leibniz were not widely used and were considered curiosities until a little more than a century later. Then Thomas of Colmar (also known as Charles Xavier Thomas) created the first successful mechanical calculator that could add, subtract, multiply, and divide. Improved desktop calculators from many inventors followed, so that by about 1890 the range of improvements included:

- Accumulation of partial results
- Storage and automatic reentry of past results (a memory function)
- Printing of the results

Each of these required manual installation. These improvements were made mainly for commercial users, not for the needs of science.

Babbage

While Thomas of Colmar was developing the desktop calculator, Charles Babbage started a series of very interesting developments in computers in Cambridge, England. Babbage was a mathematics professor, and the computer store "Babbages" was named after him. In 1812, Babbage realized that many long calculations, especially those needed to make mathematical tables, were really a series of predictable actions that were constantly repeated. From this he suspected that it should be possible to do these calculations automatically.

He began to design an automatic mechanical calculating machine, which he called a difference engine. By 1822, he had a working model to demonstrate. With financial help from the British government, Babbage started fabrication of a difference engine in 1823. It was intended to be:

- steam powered,
- fully automatic, including the printing of the resulting tables, and
- commanded by a fixed instruction program.

The difference engine had limited adaptability and applicability, but it was a great advance. Babbage continued to work on it for the next 10 years. In 1833, however, he lost interest because he thought he had a better idea: the construction of what would now be called a general-purpose, fully program-controlled, automatic mechanical digital computer. Babbage called this idea an Analytical Engine.

The ideas of this design showed a lot of foresight, although this could not be appreciated until a full century later. The plans called for a decimal computer operating on numbers (or words) of 50 decimal digits, with a storage capacity (memory) of 1,000 such numbers. The built-in operations were supposed to include everything that a modern general-purpose computer would need. These included the all-important conditional control transfer capability, which would allow commands to be executed in any order, not just the order in which they were programmed. The Analytical Engine was to use punched cards (similar to those used in a Jacquard loom), read into the machine from several different reading stations. The machine was supposed to operate automatically, by steam power, and require only one attendant.
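The difference engine was built around the method of finite differences. For a polynomial of degree n, the n-th differences between successive table entries are constant. Once the first few values are known, every further entry therefore follows from additions alone.

The Python sketch below illustrates that principle only and does not model Babbage's hardware. The function names and the example polynomial x*x + x + 41 are chosen for illustration.

```python
def initial_differences(first_values):
    """Return [f(x0), Δf(x0), Δ²f(x0), ...] from n + 1 consecutive table values."""
    diffs = []
    row = list(first_values)
    while row:
        diffs.append(row[0])
        # Next row: differences between neighboring entries of this row
        row = [b - a for a, b in zip(row, row[1:])]
    return diffs


def tabulate_by_differences(diffs, count):
    """Produce `count` successive table entries using only addition."""
    columns = list(diffs)
    table = []
    for _ in range(count):
        table.append(columns[0])
        # Each column adds in the (not yet updated) column to its right,
        # stepping every difference forward by one table row.
        for k in range(len(columns) - 1):
            columns[k] += columns[k + 1]
    return table


# Example: f(x) = x*x + x + 41 is degree 2, so three starting values suffice.
start = initial_differences([41, 43, 47])   # [41, 2, 2]
print(tabulate_by_differences(start, 6))    # [41, 43, 47, 53, 61, 71]
```

The highest difference never changes, so the machine only ever adds one column into the next. That is why a fixed, repetitive mechanism is enough.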

Babbage's computers were never finished. Various reasons have been given for his failure, the most common being the lack of precision machining techniques at the time. Another theory is that Babbage was solving a problem that few people in 1840 really needed solved. After Babbage, interest in automatic digital computers lapsed for a time.

Between 1850 and 1900 great advances were made in mathematical physics. It came to be known that most observable dynamic phenomena can be described by differential equations, which meant that most events occurring in nature can be measured or described by one equation or another. Convenient means of solving these equations would therefore be valuable.

Moreover, from a practical view, the availability of steam power caused manufacturing (boilers), transportation (steam engines and boats), and commerce to prosper, and it led to a period of many engineering achievements. The design of railroads and the construction of steamships, textile mills, and bridges required differential and integral calculus to determine such things as:

- center of gravity
- center of buoyancy
- moment of inertia
- stress distributions

Even the assessment of the power output of a steam engine needed mathematical integration. A strong need thus developed for a machine that could rapidly perform many repetitive calculations.

Use of Punched Cards by Hollerith

A step toward automated computing was the development of punched cards. Herman Hollerith and James Powers, who worked for the U.S. Census Bureau, first used them successfully with computers in 1890. They developed devices that could automatically read the information punched into the cards, without human help. This had three main benefits:

- reading errors were reduced dramatically,
- work flow increased, and
- most importantly, stacks of punched cards could be used as easily accessible memory of almost unlimited size.

Furthermore, different problems could be stored on different stacks of cards and accessed when needed.

Commercial companies saw these advantages, which soon led to improved punched-card computers from International Business Machines (IBM), Remington (yes, the same people that make shavers), Burroughs, and other corporations. These computers used electromechanical devices in which electrical power provided mechanical motion, such as turning the wheels of an adding machine. Such systems included features to:

- feed in a specified number of cards automatically
- add, multiply, and sort
- feed out cards with punched results
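The "sort" feature can be illustrated with the procedure electromechanical card sorters are generally described as using:

1. Each pass reads one card column and drops every card into the pocket for the digit punched there.
2. The pockets are then restacked in order.
3. Passes are repeated from the rightmost column of a field to the leftmost, which leaves the deck sorted on the whole field.

This is what is now called least-significant-digit radix sort. The Python sketch below is a simplified model under that assumption. Cards are represented as digit strings, the field positions are hypothetical, and the extra pockets real sorters had for zone punches and rejects are ignored.

```python
def sorter_pass(deck, column):
    """One pass: distribute cards into pockets 0-9 by the digit in `column`,
    then restack the pockets in order (stable: cards keep their relative order)."""
    pockets = [[] for _ in range(10)]
    for card in deck:
        pockets[int(card[column])].append(card)
    return [card for pocket in pockets for card in pocket]


def sort_deck(deck, field_columns):
    """Sort on a multi-digit field by running one pass per column,
    starting with the least significant (rightmost) column."""
    for column in reversed(field_columns):
        deck = sorter_pass(deck, column)
    return deck


# Each hypothetical card: a 3-digit numeric field in columns 0-2, then a tag.
deck = ["315A", "042B", "315C", "007D", "120E"]
print(sort_deck(deck, [0, 1, 2]))
# ['007D', '042B', '120E', '315A', '315C']
```

The method works because every pass is stable. A later pass on a more significant column never disturbs the order that earlier passes set up among cards sharing the same digit.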

Compared to today’s machines, these computers were slow. They usually processed 50 to 220 cards per minute, with each card holding about 80 characters (one decimal digit or character per column). At the time, however, punched cards were a huge step forward, providing a means of input/output (I/O) and memory storage on a huge scale. For more than 50 years after their first use, punched card machines did most of the world’s business computing and a considerable amount of the computing work in science.

Electronic Digital Computers

The start of World War II produced a large need for computer capacity, especially for the military. New weapons were made for which trajectory tables and other essential data were needed. In 1942, John P. Eckert, John W. Mauchly, and their associates at the Moore School of Electrical Engineering of the University of Pennsylvania decided to build a high-speed electronic computer to do the job. This machine became known as ENIAC (Electronic Numerical Integrator and Computer). The size of ENIAC’s numerical "word" was 10 decimal digits. It could multiply two of these numbers at a rate of 300 per second by finding the value of each product from a multiplication table stored in its memory.
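The idea of multiplying by looking products up rather than computing them can be sketched in Python. This is a simplified model and not ENIAC's actual multiplier circuit. It assumes a 10-by-10 table of single-digit products, then for two 10-digit words it:

1. looks up the product of every digit pair,
2. shifts each product into place by a power of ten, and
3. adds the results.

The names `DIGIT_TABLE` and `table_multiply` are hypothetical. Note that the product of two 10-digit words can need up to 20 digits.

```python
WORD_DIGITS = 10  # ENIAC's word size as given in the text

# Table of single-digit products, built once; stands in for a stored table.
DIGIT_TABLE = [[a * b for b in range(10)] for a in range(10)]


def digits(n, width=WORD_DIGITS):
    """Decimal digits of n, least significant first, padded to `width`."""
    return [(n // 10**i) % 10 for i in range(width)]


def table_multiply(x, y, width=WORD_DIGITS):
    """Multiply two non-negative numbers of up to `width` digits using
    table lookups, decimal shifts, and additions only."""
    limit = 10**width
    if not (0 <= x < limit and 0 <= y < limit):
        raise ValueError("operands must fit in one word")
    total = 0
    for i, y_digit in enumerate(digits(y, width)):
        for j, x_digit in enumerate(digits(x, width)):
            # Multiplying by 10**(i + j) is only a decimal shift of the looked-up product.
            total += DIGIT_TABLE[x_digit][y_digit] * 10 ** (i + j)
    return total


print(table_multiply(1234567890, 9876543210) == 1234567890 * 9876543210)  # True
```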

ENIAC was about 1,000 times faster than the previous generation of relay computers. It used 18,000 vacuum tubes, occupied about 1,800 square feet of floor space, and consumed about 180,000 watts of electrical power. Its hardware included:

- punched card I/O,
- 1 multiplier,
- 1 divider/square rooter, and
- 20 adders using decimal ring counters, which also served as quick-access (0.0002-second) read-write register storage.

The executable instructions making up a program were embodied in the separate "units" of ENIAC, which were plugged together to form a "route" for the flow of information.

These connections had to be redone after each computation, together with presetting function tables and switches. This "wire your own" technique was inconvenient (for obvious reasons), and ENIAC could be considered programmable only in a loose sense. It was, however, efficient in handling the particular programs for which it had been designed. ENIAC is commonly accepted as the first successful high-speed electronic digital computer (EDC) and was used from 1946 to 1955.

A controversy developed in 1971, however, over the patentability of ENIAC's basic digital concepts. The claim was that another physicist, John V. Atanasoff, had already used basically the same ideas in a simpler vacuum-tube device he built in the 1930s while at Iowa State College. In 1973 the courts found in favor of the company using the Atanasoff claim.

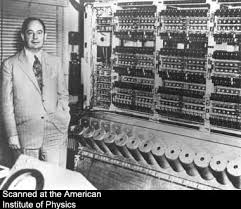

The Modern Stored Program EDC

Fascinated by the success of ENIAC, the mathematician John von Neumann undertook an abstract study of computation in 1945. It showed that a computer should have a very simple, fixed physical structure and yet be able to execute any kind of computation through proper programmed control, without any change to the unit itself.

Von Neumann contributed a new awareness of how practical yet fast computers should be organized and built. These ideas, usually referred to as the stored-program technique, became essential for future generations of high-speed digital computers and were universally adopted.

The stored-program technique involves many features of computer design and function besides the one it is named after. In combination, these features make very-high-speed operation attainable. Consider what 1,000 operations per second means: at that rate a machine executes 3.6 million instructions an hour. If each instruction in a job program were used only once, in consecutive order, no human programmer could write instructions fast enough to keep the computer busy.

Arrangements must be made, therefore, for parts of the job program (called subroutines) to be used repeatedly in a manner that depends on the way the computation goes. It would also clearly be helpful if instructions could be changed during a computation to make them behave differently. Von Neumann met these two needs in two ways:

- He made a special type of machine instruction, called a conditional control transfer, which allowed the program sequence to be stopped and started again at any point.
- He stored all instruction programs together with data in the same memory unit, so that, when needed, instructions could be arithmetically changed in the same way as data.

As a result of these techniques, computing and programming became much faster, more flexible, and more efficient. Regularly used subroutines did not have to be reprogrammed for each new program. Instead, they could be kept in "libraries" and read into memory only when needed, so much of a given program could be assembled from the subroutine library. The all-purpose computer memory became the assembly place in which all parts of a long computation were kept, worked on piece by piece, and put together to form the final results. The computer's control was left with the role of an "errand runner" for the overall process. As soon as the advantage of these techniques became clear, they became standard practice.
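The two ideas above can be seen together in a toy machine. One is the conditional control transfer; the other is keeping instructions in the same memory as data so they can be changed arithmetically.

The Python sketch below invents its own tiny instruction set and is not the instruction set of any historical machine. Each instruction is packed as opcode * 1000 + address, with hypothetical opcodes LOAD, ADD, STORE, SUB, JPOS, and HALT. The program sums five numbers by adding 1 to the address field of its own ADD instruction on each pass. It keeps looping through a conditional jump while a counter stays positive.

```python
# Hypothetical opcodes for a toy stored-program machine.
HALT, LOAD, ADD, STORE, SUB, JPOS = range(6)


def encode(op, addr=0):
    """Pack an instruction into one memory word: opcode * 1000 + address."""
    return op * 1000 + addr


def run(memory, max_steps=10_000):
    """Fetch, decode, and execute words from `memory` until HALT."""
    pc, acc = 0, 0
    for _ in range(max_steps):
        op, addr = divmod(memory[pc], 1000)
        pc += 1
        if op == HALT:
            return memory
        elif op == LOAD:
            acc = memory[addr]
        elif op == ADD:
            acc += memory[addr]
        elif op == STORE:
            memory[addr] = acc
        elif op == SUB:
            acc -= memory[addr]
        elif op == JPOS:  # conditional control transfer
            if acc > 0:
                pc = addr
        else:
            raise ValueError(f"unknown opcode {op} at address {pc - 1}")
    raise RuntimeError("step limit reached")


def summing_program(values):
    """Memory image: code at 0-10, data at 20-29, sum at 30, constant 1 at 31,
    remaining-item counter at 32."""
    if not 1 <= len(values) <= 10:
        raise ValueError("this layout holds 1 to 10 values")
    memory = [0] * 40
    memory[0:11] = [
        encode(LOAD, 30),   # 0: acc = running sum
        encode(ADD, 20),    # 1: acc += current item (address field gets rewritten)
        encode(STORE, 30),  # 2: save running sum
        encode(LOAD, 1),    # 3: read instruction 1 as ordinary data
        encode(ADD, 31),    # 4: add 1 to it, advancing its address field
        encode(STORE, 1),   # 5: write the modified instruction back
        encode(LOAD, 32),   # 6: acc = items remaining
        encode(SUB, 31),    # 7: one fewer
        encode(STORE, 32),  # 8: save counter
        encode(JPOS, 0),    # 9: loop back while items remain
        encode(HALT),       # 10: stop
    ]
    memory[20:20 + len(values)] = values
    memory[31] = 1
    memory[32] = len(values)
    return memory


memory = run(summing_program([3, 1, 4, 1, 5]))
print(memory[30])                    # 14
print(memory[1] == encode(ADD, 25))  # True: the program has rewritten itself
```

Instruction 1 is both executed and treated as a number by instructions 3 to 5. That dual role is possible only because code and data share one memory.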
